Quick facts

Background

My Role

Freelance Product Designer

Duration

5 months

(Aug 2021 - Jan 2022)

Team Members

Product Manager

UX Researcher

Scrum Master

QA Engineer

Data Scientist

Developers

Toolbox

Figma

Dovetail

UserZoom

HotJar

Jira & Confluence

Deliverables

User Journey Map

Competitive Analysis

Screen Flows

Mockups

Usability Testing

Hi-fi Prototype

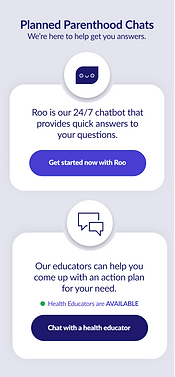

Planned Parenthood provides a wealth of resources and knowledge for sexual and reproductive health. Two of those resources are chat-based products: Roo and Chat/Text. Roo is an AI-based chatbot intended to answer quick, simple questions, while Chat/Text is the organization’s long-standing human-powered chat service geared toward more in-depth conversations. Both services had been successful since their launch, with over 8 million users getting their questions answered.

The challenge

There was no clear distinction between the two products, which led to confusion about which product was best for which needs. Because only a limited number of health educators were available to chat with users, Roo began taking on more in-depth queries that it was unable to answer sufficiently. More users began abandoning their journey because no health educators were available and alternate steps were unclear.

My tasks

When I joined, there had recently been a release called Unified Onboarding. This was the first version of combining both chat products into one service. I was given a walk-through of the current experience along with necessary files and documents from the previous designer. The team had now enlisted my expertise in several key areas to meet their KPIs and success metrics for the next quarter:


Improve...

Product branding to better guide users to the most appropriate chat option(s) based on their needs.

Patient wayfinding experience and conversion to Roo by 30% if health educators are unavailable.


Reduce...

Friction that may occur when a user goes through the Roo or health educator journey.

Abandonment by 35% from users utilizing Roo and/or seeking a health educator.


Uncovering the data

I reviewed all the data, analysis, and feedback that had been collected up until I joined the product team. There were plenty of insights, but none of the feedback from the most recent testing had been synthesized or interpreted. I dove head first into making sense of what was in front of me and began gaining a deeper understanding.

After reviewing the data and research, I created a journey map of the current experience for the product. I had already brainstormed several possible ideas and jotted down some follow-up questions for the team. While mapping, I focused on the scenario of a user wanting to ask a human (aka a health educator) a specific question.

From here, I was able to depict what users were encountering and why they felt certain ways. I raised my concerns to the team and asked questions to understand what was absolutely necessary for the business needs while also not letting that overshadow the users' needs.

Checking out the competition

Now that I had begun brainstorming ways to fix the current experience, I couldn't help but think about how other conversational apps were onboarding their users and what we could learn from them. I researched and compared 4 other chat products in a similar field, created a competitive analysis, and presented my findings to our team.

Auditing the existing experience

Using the existing experience as my starting ground, I performed an audit on each screen. I incorporated my proposed redesigns that implemented UX improvements across key focus areas. In a team design review, I provided a walk-through of my annotations, rationale, and proposed designs.

Major improvements

In my redesigns, I created a variety of user-focused improvements, but two were particularly noteworthy because I felt they would bring the greatest value to the user’s journey and directly impact the project’s KPIs.

The first was what I called the ‘Health Educator Status Indicator’. This update informed users of health educator availability on the first screen rather than the fifth screen, where it appeared in the existing user journey. Additionally, users were presented with alternative options sooner.


The second major improvement was the new simplified demographic question screen. In the existing experience, users answered demographic questions across 3 different screens. My redesign combined 3 screens into 1, increasing efficiency and decreasing the opportunity for user abandonment at this stage.

CURRENT

PROPOSED

Bringing it all together

To better communicate the new user experience of my redesigns, I drafted screen flows for my mockups. The team appreciated the visual aid and it further helped to provide an understanding of how my recommendations would improve a user's journey.


After creating screen flows of the user journeys, it was time to add in some interactions. I designed high-fidelity prototypes that captured 3 use cases:

Health educators are available

Health educators are busy

Health educators are offline

Testing with real users

I recruited 20 participants to perform unmoderated usability tests via UserZoom where participants were asked to think aloud as they read prompts and completed tasks using my prototype. Below are some demographic data points and key user feedback:

"I think it was straightforward and I could see that there were 2 places that I could go."

"The status signs letting you know if the health educator is busy is really cool. That way I don't have to waste time if I want to talk to one and they're not available."

"I like it as far as asking questions but I think there should be some more graphics or something. Just feels like it's missing a something."

"I forgot that Roo was actually the chatbot so that kinda threw me off a little. It'd be nice to call that out."

Iterating & ideating

I received a lot of valuable feedback from the usability test sessions and was happy that many of my concerns were validated. I captured the significant user inputs and incorporated them into my designs. During this time, the product name and home screen design were two major updates that I needed collective and immediate feedback on prior to another round of testing. Unified Onboarding didn't seem to particularly resonate with users and I learned from the previous testing that there was a desire for more graphics. I knew I wanted to A/B test a couple of ideas with users but first needed to narrow down my decisions.

As a result, I created a FigJam board and invited the Product Design org to share their feedback. First, I organized an open voting area on the board for product name ideation. After a few days, I wrapped up voting and we collectively landed on Planned Parenthood Chats as our new name. Following our name decision, I applied it to my 5 home screen mockups and invited the org to vote on their favorite home screen design.

Runner-up

Now I was ready to conduct another round of testing to see how all the updates affected the user's journey. Using UserZoom again, I recruited 15 additional participants to gather updated feedback on task efficiency, clarity, and overall design. I also asked users about their favorite home screen design between the top two voted choices. The majority of users selected the same design that our teams selected; however, multiple users mentioned how they liked the darker color of the header in the second option. With that feedback, I created a home screen design that combined both favorites.

Second iteration also included:

- More inclusiveness: Added two additional gender selections on the demographic screen
- Increased "fun": Additional micro-interactions and animations
- Reduced cognitive load: Removing non-essential input fields
- Minimalist design: Shorter, more concise copy on screens

TOP 2 FAVORITES

HAPPY MEDIUM

Maintaining the design system

There was already a design system established so I updated and maintained it, adding my new elements to it and updating several of the components based on improvements.


Revealing the final product

Planned Parenthood Chats was successfully deployed to millions of users nationwide. The merge of chat services was vital to the organization's vision of keeping its brand and offerings consistent. I was ecstatic to have been a part of a solution that provides real-life answers to teens and young adults!

Measuring our success

Shortly after Planned Parenthood Chats’ launch, our Data Analytics team quickly began gathering data, metrics, and feedback from our users to see how the new design was impacting their experience. I was overjoyed to learn the following:

57%

increase in Roo conversions

during Health Educator Offline or Busy statuses

(KPI goal: 30% increase)

43%

reduction in total number of users abandoning the journey with Roo and educators

(KPI goal: 35% decrease)


88%

of users felt 'very' or 'somewhat' confident about knowing which product to select for their needs

Looking back

The health educator status indicator was one of the highest value-added features I created in my redesign. However, it was also one of the most challenging for the devs to implement. The main issue was the frequency at which the back end checked & updated the status indicator to display on the front end. I remember us being on hold for two weeks because we weren't sure if it was ever going to consistently pull and display the most accurate data. But the devs were able to work with the vendor to pull the data and get it figured out in the end!
